How can an Architect enable optimal clustering to enhance performance for different access paths on a given table?

Create multiple clustering keys for a table.

Create multiple materialized views with different cluster keys.

Create super projections that will automatically create clustering.

Create a clustering key that contains all columns used in the access paths.

According to the SnowPro Advanced: Architect documents and learning resources, the best way to enable optimal clustering to enhance performance for different access paths on a given table is to create multiple materialized views with different cluster keys. A materialized view is a pre-computed result set that is derived from a query on one or more base tables. A materialized view can be clustered by specifying a clustering key, which is a subset of columns or expressions that determines how the data in the materialized view is co-located in micro-partitions. By creating multiple materialized views with different cluster keys, an Architect can optimize the performance of queries that use different access paths on the same base table. For example, if a base table has columns A, B, C, and D, and there are queries that filter on A and B, or on C and D, or on A and C, the Architect can create three materialized views, each with a different cluster key: (A, B), (C, D), and (A, C). This way, each query can leverage the optimal clustering of the corresponding materialized view and achieve faster scan efficiency and better compression.
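The A/B/C/D example above can be sketched in Snowflake SQL as follows. This is a minimal illustration, not a definitive design: the table name `base_t`, the view names, and the column types are assumptions, and materialized views require Enterprise Edition or higher.

```sql
-- Hypothetical base table; names and types are illustrative only.
CREATE TABLE base_t (a NUMBER, b NUMBER, c NUMBER, d NUMBER);

-- One materialized view per access path, each with its own clustering key.
CREATE MATERIALIZED VIEW mv_ab CLUSTER BY (a, b) AS
  SELECT a, b, c, d FROM base_t;

CREATE MATERIALIZED VIEW mv_cd CLUSTER BY (c, d) AS
  SELECT a, b, c, d FROM base_t;

CREATE MATERIALIZED VIEW mv_ac CLUSTER BY (a, c) AS
  SELECT a, b, c, d FROM base_t;
```

Each additional materialized view adds storage and background maintenance cost, so this pattern is usually reserved for access paths that are queried often.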

Snowflake Documentation: Materialized Views

Snowflake Learning: Materialized Views

https://www.snowflake.com/blog/using-materialized-views-to-solve-multi-clustering-performance-problems/

What is a valid object hierarchy when building a Snowflake environment?

Account --> Database --> Schema --> Warehouse

Organization --> Account --> Database --> Schema --> Stage

Account --> Schema --> Table --> Stage

Organization --> Account --> Stage --> Table --> View

Option B (Organization --> Account --> Database --> Schema --> Stage) is the valid object hierarchy when building a Snowflake environment, according to the Snowflake documentation. Snowflake is a cloud data platform that supports various types of objects, such as databases, schemas, tables, views, stages, and warehouses. These objects are organized in a hierarchical structure, as follows:

Organization: An organization is the top-level entity that represents a group of Snowflake accounts that are related by business needs or ownership. An organization can have one or more accounts, and can enable features such as cross-account data sharing, billing and usage reporting, and single sign-on across accounts [1, 2].

Account: An account is the primary entity that represents a Snowflake customer. An account can have one or more databases, schemas, stages, warehouses, and other objects. An account can also have one or more users, roles, and security integrations. An account is associated with a specific cloud platform, region, and Snowflake edition [3, 4].

Database: A database is a logical grouping of schemas. A database can have one or more schemas, and can store structured, semi-structured, or unstructured data. A database can also have properties such as retention time, encryption, and ownership [5, 6].

Schema: A schema is a logical grouping of tables, views, stages, and other objects. A schema can have one or more objects, and can define the namespace and access control for the objects. A schema can also have properties such as ownership and data retention time.

Stage: A stage is a named location that references the files in external or internal storage. A stage can be used to load data into Snowflake tables using the COPY INTO <table> command, or to unload data from Snowflake tables using the COPY INTO <location> command. A named stage is a schema-level object, and can have properties such as file format, encryption, and credentials.

The other options listed are not valid object hierarchies, because they either omit or misplace some objects in the structure. For example, option A places the warehouse under the schema level, which is incorrect because a warehouse is an account-level object. Option C omits the database level and places the stage under the table, which is incorrect. Option D omits the database and schema levels and chains the stage, table, and view under the account, which is incorrect because all three are schema-level objects.
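The containment described above shows up directly in fully qualified object names. A minimal sketch, assuming hypothetical names (`sales_db`, `raw`, `landing_stage`, `etl_wh`):

```sql
-- Database lives in the account; schema lives in the database.
CREATE DATABASE sales_db;
CREATE SCHEMA sales_db.raw;

-- A named stage is addressed as database.schema.stage.
CREATE STAGE sales_db.raw.landing_stage;

-- A warehouse is created at the account level, so its name is not
-- qualified by a database or schema.
CREATE WAREHOUSE etl_wh WAREHOUSE_SIZE = 'XSMALL';
```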

1. Snowflake Documentation: Organizations

2. Snowflake Blog: Introducing Organizations in Snowflake

3. Snowflake Documentation: Accounts

4. Snowflake Blog: Understanding Snowflake Account Structures

5. Snowflake Documentation: Databases

6. Snowflake Blog: How to Create a Database in Snowflake

7. Snowflake Documentation: Schemas

8. Snowflake Blog: How to Create a Schema in Snowflake

9. Snowflake Documentation: Stages

10. Snowflake Blog: How to Use Stages in Snowflake

A group of order_admin users must delete records older than 5 years from an orders table without having DELETE privileges. The order_manager role has DELETE privileges.

How can this be achieved?

Create a stored procedure with caller’s rights.

Create a stored procedure with selectable caller/owner rights.

Create a stored procedure that runs with owner’s rights and grant usage to order_admin.

This is not possible without DELETE privileges.

Snowflake stored procedures can execute using either caller’s rights or owner’s rights. When a procedure runs with owner’s rights, it executes using the privileges of the role that owns the procedure—not the role invoking it.

By creating a stored procedure owned by the order_manager role (which has DELETE privileges) and defining it to run with owner’s rights, the Architect can encapsulate the business logic (delete records older than 5 years) securely (Answer C). Granting only USAGE on the procedure to order_admin allows them to execute the cleanup without directly holding DELETE privileges on the table.

Caller’s rights would fail because order_admin lacks DELETE privileges. Snowflake does not support runtime selection of execution rights. This is a classic SnowPro Architect security pattern: least privilege access combined with controlled procedural execution.
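The owner's rights pattern described above might look like the following sketch. The procedure name `purge_old_orders` and the `order_date` column are assumptions for illustration; the roles and the `orders` table come from the question.

```sql
-- Run as the role that holds DELETE on the table, so it owns the procedure.
USE ROLE order_manager;

CREATE OR REPLACE PROCEDURE purge_old_orders()
  RETURNS STRING
  LANGUAGE SQL
  EXECUTE AS OWNER   -- runs with order_manager's privileges
AS
$$
BEGIN
  -- order_date is a hypothetical column name.
  DELETE FROM orders
   WHERE order_date < DATEADD(year, -5, CURRENT_DATE());
  RETURN 'old orders purged';
END;
$$;

-- order_admin can call the procedure but holds no DELETE on orders.
GRANT USAGE ON PROCEDURE purge_old_orders() TO ROLE order_admin;
```

The order_admin role would also need USAGE on the database and schema that contain the procedure before `CALL purge_old_orders();` succeeds.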

A company is using Snowflake in Azure in the Netherlands. The company analyst team also has data in JSON format that is stored in an Amazon S3 bucket in the AWS Singapore region that the team wants to analyze.

The Architect has been given the following requirements:

1. Provide access to frequently changing data

2. Keep egress costs to a minimum

3. Maintain low latency

How can these requirements be met with the LEAST amount of operational overhead?

Use a materialized view on top of an external table against the S3 bucket in AWS Singapore.

Use an external table against the S3 bucket in AWS Singapore and copy the data into transient tables.

Copy the data between providers from S3 to Azure Blob storage to collocate, then use Snowpipe for data ingestion.

Use AWS Transfer Family to replicate data between the S3 bucket in AWS Singapore and an Azure Netherlands Blob storage, then use an external table against the Blob storage.

Option A is the best design to meet the requirements because it uses a materialized view on top of an external table against the S3 bucket in AWS Singapore. A materialized view is a database object that stores the pre-computed results of a query [1]. An external table is a table that references data files stored in a cloud storage service, such as Amazon S3 [2]. By using a materialized view on top of an external table, the company can provide access to frequently changing data, keep egress costs to a minimum, and maintain low latency. This is because the materialized view stores the query results in Snowflake, reducing the need to read the external data files across clouds and incur network charges. The materialized view also improves query performance by avoiding a scan of the external data files on every query. Snowflake maintains the materialized view in the background; changes in the external data files are picked up once the external table's metadata is refreshed [1].

Option B is not the best design because it uses an external table against the S3 bucket in AWS Singapore and copies the data into transient tables. A transient table is a table that has no Fail-safe period and at most one day of Time Travel; it persists until it is explicitly dropped [3]. By using an external table and copying the data into transient tables, the company will incur more egress costs and operational overhead than using a materialized view. This is because the external table will access the external data files every time a query is executed, and the copy operation will also transfer data from S3 to Snowflake. The transient tables will also consume more storage space in Snowflake and require manual maintenance to ensure they are up to date.

Option C is not the best design because it copies the data between providers from S3 to Azure Blob storage to collocate, then uses Snowpipe for data ingestion. Snowpipe is a service that automates the loading of data from external sources into Snowflake tables [4]. By copying the data between providers, the company will incur high egress costs and latency, as well as operational complexity and maintenance of the infrastructure. Snowpipe will also add another layer of processing and storage in Snowflake, which may not be necessary if the external data files are already in a queryable format.

Option D is not the best design because it uses AWS Transfer Family to replicate data between the S3 bucket in AWS Singapore and an Azure Netherlands Blob storage, then uses an external table against the Blob storage. AWS Transfer Family is a service that enables secure and seamless transfer of files over SFTP, FTPS, and FTP to and from Amazon S3 or Amazon EFS [5]. By using AWS Transfer Family, the company will incur high egress costs and latency, as well as operational complexity and maintenance of the infrastructure. The external table will also access the external data files every time a query is executed, which may affect the query performance.
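A rough sketch of the Option A design, assuming a hypothetical bucket path, storage integration (`s3_sg_int`), object names, and JSON attribute names (`id`, `ts`); none of these appear in the question.

```sql
-- External stage pointing at the S3 bucket (URL and integration are placeholders).
CREATE STAGE s3_sg_stage
  URL = 's3://example-bucket/events/'
  STORAGE_INTEGRATION = s3_sg_int;

-- External table over the JSON files; each row is exposed in the VALUE column.
CREATE EXTERNAL TABLE ext_events
  LOCATION = @s3_sg_stage
  AUTO_REFRESH = FALSE            -- assumption: metadata is refreshed manually
  FILE_FORMAT = (TYPE = JSON);

-- Materialized view that keeps the parsed results inside Snowflake.
CREATE MATERIALIZED VIEW mv_events AS
  SELECT value:id::STRING        AS id,
         value:ts::TIMESTAMP_NTZ AS ts
    FROM ext_events;

-- Register new or changed files so the view can pick them up.
ALTER EXTERNAL TABLE ext_events REFRESH;
```

Queries then read `mv_events`, so the cross-cloud read happens when the view is maintained instead of on every query.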

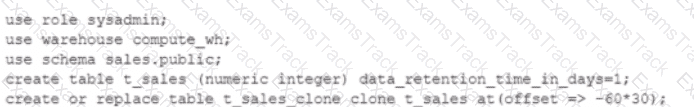

A user is executing the following command sequentially within a timeframe of 10 minutes from start to finish:

What would be the output of this query?

Table T_SALES_CLONE successfully created.

Time Travel data is not available for table T_SALES.

The offset -> is not a valid clause in the clone operation.

Syntax error line 1 at position 58 unexpected 'at'.

The query is executing a clone operation on an existing table t_sales with an offset to account for the retention time. The syntax used is correct for cloning a table in Snowflake, and the use of the at(offset => -60*30) clause is valid. This specifies that the clone should be based on the state of the table 30 minutes prior (60 seconds * 30). Assuming the table t_sales exists and has been modified within the last 30 minutes, and considering the data_retention_time_in_days is set to 1 day (which enables time travel queries for the past 24 hours), the table t_sales_clone would be successfully created based on the state of t_sales 30 minutes before the clone command was issued.
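The original commands are available only as an image, so the following is a sketch of the clause the explanation describes, not a transcription of the question.

```sql
-- OFFSET is expressed in seconds relative to now: -60*30 = 30 minutes ago.
CREATE OR REPLACE TABLE t_sales_clone
  CLONE t_sales AT (OFFSET => -60*30);

-- Note: a Time Travel clone fails if the requested point in time is
-- outside the table's retention period or earlier than the moment
-- the source table was created.
```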

A company's Architect needs to find an efficient way to get data from an external partner, who is also a Snowflake user. The current solution is based on daily JSON extracts that are placed on an FTP server and uploaded to Snowflake manually. The files are changed several times each month, and the ingestion process needs to be adapted to accommodate these changes.

What would be the MOST efficient solution?

Ask the partner to create a share and add the company's account.

Ask the partner to use the data lake export feature and place the data into cloud storage where Snowflake can natively ingest it (schema-on-read).

Keep the current structure but request that the partner stop changing files, instead only appending new files.

Ask the partner to set up a Snowflake reader account and use that account to get the data for ingestion.

The most efficient solution is to ask the partner to create a share and add the company’s account (Option A). This way, the company can access the live data from the partner without any data movement or manual intervention. Snowflake’s secure data sharing feature allows data providers to share selected objects in a database with other Snowflake accounts. The shared data is read-only and incurs no storage cost for the data consumer, who pays only for the compute used to query it. The data consumers can query the shared data directly or create local copies of the shared objects in their own databases. Option B is not efficient because it involves using the data lake export feature, which is intended for exporting data from Snowflake to an external data lake, not for importing data from another Snowflake account. The data lake export feature also requires the data provider to create an external stage on cloud storage and use the COPY INTO <location> command.

Introduction to Secure Data Sharing | Snowflake Documentation

Data Lake Export Public Preview Is Now Available on Snowflake | Snowflake Blog

Managing Reader Accounts | Snowflake Documentation
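For the secure data sharing approach in Option A, the two sides might run something like the sketch below. All object names and the account identifiers (`consumer_org.consumer_acct`, `provider_acct`) are placeholders.

```sql
-- Provider (partner) side: create the share and expose one table.
CREATE SHARE partner_share;
GRANT USAGE  ON DATABASE partner_db               TO SHARE partner_share;
GRANT USAGE  ON SCHEMA   partner_db.public        TO SHARE partner_share;
GRANT SELECT ON TABLE    partner_db.public.orders TO SHARE partner_share;
ALTER SHARE partner_share ADD ACCOUNTS = consumer_org.consumer_acct;

-- Consumer (company) side: mount the share as a read-only database.
CREATE DATABASE partner_data FROM SHARE provider_acct.partner_share;
SELECT COUNT(*) FROM partner_data.public.orders;
```

Because the consumer queries the provider's live tables, changes to the partner's data need no ingestion pipeline on the company's side.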

An Architect is designing a solution that will be used to process changed records in an orders table. Newly-inserted orders must be loaded into the f_orders fact table, which will aggregate all the orders by multiple dimensions (time, region, channel, etc.). Existing orders can be updated by the sales department within 30 days after the order creation. In case of an order update, the solution must perform two actions:

1. Update the order in the F_ORDERS fact table.

2. Load the changed order data into the special table ORDER_REPAIRS.

This table is used by the Accounting department once a month. If the order has been changed, the Accounting team needs to know the latest details and perform the necessary actions based on the data in the order_repairs table.

What data processing logic design will be the MOST performant?

Use one stream and one task.

Use one stream and two tasks.

Use two streams and one task.

Use two streams and two tasks.

The most performant design for processing changed records, considering the need to both update records in the f_orders fact table and load changes into the order_repairs table, is to use one stream and two tasks. The stream will monitor changes in the orders table, capturing both inserts and updates. The first task would apply these changes to the f_orders fact table, ensuring all dimensions are accurately represented. The second task would use the same stream to insert relevant changes into the order_repairs table, which is critical for the Accounting department's monthly review. This method ensures efficient processing by minimizing the overhead of managing multiple streams and synchronizing between them, while also allowing specific tasks to optimize for their target operations.
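A partial sketch of the stream and the first task follows. The column names (`order_id`, `amount`), the warehouse `etl_wh`, and the schedule are assumptions, and the real fact-table load would aggregate by dimensions. How the second task reads the same changes is deliberately left out, because a stream's offset advances once a DML statement that consumes it commits.

```sql
-- Change tracking on the source table.
CREATE STREAM orders_stream ON TABLE orders;

-- In a standard stream, an update appears as a DELETE row plus an INSERT row,
-- both flagged with METADATA$ISUPDATE = TRUE.
SELECT * FROM orders_stream
 WHERE METADATA$ACTION = 'INSERT' AND METADATA$ISUPDATE = FALSE;  -- new orders
SELECT * FROM orders_stream
 WHERE METADATA$ACTION = 'INSERT' AND METADATA$ISUPDATE = TRUE;   -- updated orders

-- Task that applies new and updated orders to the fact table.
CREATE TASK load_f_orders
  WAREHOUSE = etl_wh
  SCHEDULE  = '5 MINUTE'
  WHEN SYSTEM$STREAM_HAS_DATA('orders_stream')
AS
  MERGE INTO f_orders f
  USING (SELECT order_id, amount
           FROM orders_stream
          WHERE METADATA$ACTION = 'INSERT') s
     ON f.order_id = s.order_id
  WHEN MATCHED THEN UPDATE SET f.amount = s.amount
  WHEN NOT MATCHED THEN INSERT (order_id, amount) VALUES (s.order_id, s.amount);

-- Tasks are created suspended and must be resumed explicitly.
ALTER TASK load_f_orders RESUME;
```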

Which system functions does Snowflake provide to monitor clustering information within a table? (Choose two.)

SYSTEM$CLUSTERING_INFORMATION

SYSTEM$CLUSTERING_USAGE

SYSTEM$CLUSTERING_DEPTH

SYSTEM$CLUSTERING_KEYS

SYSTEM$CLUSTERING_PERCENT

According to the Snowflake documentation, SYSTEM$CLUSTERING_INFORMATION and SYSTEM$CLUSTERING_DEPTH are the two system functions provided by Snowflake to monitor clustering information within a table. A system function is a type of function that allows executing actions or returning information about the system. A clustering key is a feature that allows organizing data across micro-partitions based on one or more columns in the table. Clustering can improve query performance by reducing the number of micro-partitions to scan.

SYSTEM$CLUSTERING_INFORMATION is a system function that returns clustering information, including average clustering depth, for a table based on one or more columns in the table. The function takes a table name and an optional column name or expression as arguments, and returns a JSON string with the clustering information. The clustering information includes the cluster by keys, the total partition count, the total constant partition count, the average overlaps, and the average depth [1].

SYSTEM$CLUSTERING_DEPTH is a system function that computes the average clustering depth of a table based on one or more columns in the table, or on the table's defined clustering key if no columns are given. The function takes a table name and an optional column name or expression as arguments, and returns a numeric value. The average depth reflects how many micro-partitions overlap on the specified columns. A lower clustering depth indicates a better clustering [2].
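Both functions are called with the table name as a string and, optionally, a parenthesized column list. A short sketch, assuming a hypothetical table `t_sales` with columns `region` and `sale_date`:

```sql
-- JSON summary: clustering keys, partition counts, average overlaps, average depth.
SELECT SYSTEM$CLUSTERING_INFORMATION('t_sales', '(region, sale_date)');

-- Average clustering depth for the same columns, as a single number.
SELECT SYSTEM$CLUSTERING_DEPTH('t_sales', '(region, sale_date)');

-- With the column list omitted, the table's defined clustering key is used.
SELECT SYSTEM$CLUSTERING_DEPTH('t_sales');
```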

1. SYSTEM$CLUSTERING_INFORMATION | Snowflake Documentation

2. SYSTEM$CLUSTERING_DEPTH | Snowflake Documentation

What transformations are supported in the below SQL statement? (Select THREE).

CREATE PIPE ... AS COPY ... FROM (...)

Data can be filtered by an optional where clause.

Columns can be reordered.

Columns can be omitted.

Type casts are supported.

Incoming data can be joined with other tables.

The ON_ERROR = ABORT_STATEMENT option can be used.

The SQL statement is a command for creating a pipe in Snowflake, which is an object that defines the COPY INTO <table> statement used by Snowpipe to load data from a stage into a table.
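A sketch of a pipe whose COPY statement transforms data during the load. The stage, table, and JSON attribute names are hypothetical. It illustrates column reordering, column omission, and type casts in the SELECT, which COPY transformations support.

```sql
CREATE PIPE orders_pipe AS
  COPY INTO orders_tgt (amount, order_id)      -- target columns in a chosen order
  FROM (
    SELECT $1:amount::NUMBER(10,2),            -- cast during load
           $1:id::NUMBER                       -- other attributes are omitted
      FROM @orders_stage
  )
  FILE_FORMAT = (TYPE = JSON);
```

The SELECT inside a COPY transformation reads only from the stage, so it has no WHERE clause and no joins to other tables.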

Copyright © 2026 Examstrack. All Rights Reserved

TESTED 21 Feb 2026