34 of 55.

A data engineer is investigating a Spark cluster that is experiencing underutilization during scheduled batch jobs.

After checking the Spark logs, they noticed that tasks are often getting killed due to timeout errors, and there are several warnings about insufficient resources in the logs.

Which action should the engineer take to resolve the underutilization issue?

25 of 55.

A Data Analyst is working on employees_df and needs to add a new column where a 10% tax is calculated on the salary.

Additionally, the DataFrame contains the column age, which is not needed.

Which code fragment adds the tax column and removes the age column?

A data scientist at a financial services company is working with a Spark DataFrame containing transaction records. The DataFrame has millions of rows and includes columns for transaction_id, account_number, transaction_amount, and timestamp. Due to an issue with the source system, some transactions were accidentally recorded multiple times with identical information across all fields. The data scientist needs to remove rows with duplicates across all fields to ensure accurate financial reporting.

Which approach should the data scientist use to deduplicate the transactions using PySpark?

Which command overwrites an existing JSON file when writing a DataFrame?

What is the benefit of Adaptive Query Execution (AQE)?

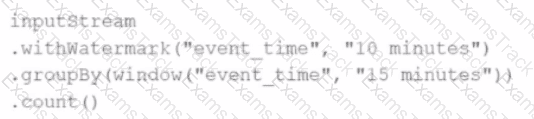

Given this code:

    .withWatermark("event_time", "10 minutes")
    .groupBy(window("event_time", "15 minutes"))
    .count()

What happens to data that arrives after the watermark threshold?

Options:

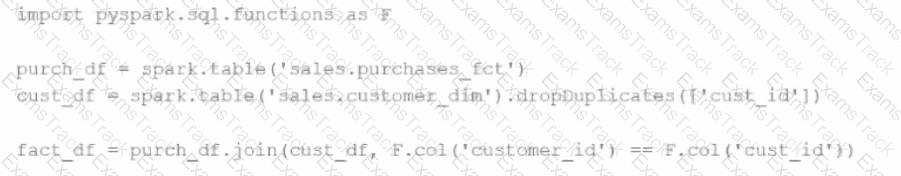

A developer is trying to join two tables, sales.purchases_fct and sales.customer_dim, using the following code:

fact_df = purch_df.join(cust_df, F.col('customer_id') == F.col('custid'))

The developer has discovered that customers in the purchases_fct table that do not exist in the customer_dim table are being dropped from the joined table.

Which change should be made to the code to stop these customer records from being dropped?

A data engineer needs to persist a file-based data source to a specific location. However, by default, Spark writes to the warehouse directory (e.g., /user/hive/warehouse). To override this, the engineer must explicitly define the file path.

Which line of code ensures the data is saved to a specific location?

Options:

32 of 55.

A developer is creating a Spark application that performs multiple DataFrame transformations and actions. The developer wants to maintain optimal performance by properly managing the SparkSession.

How should the developer handle the SparkSession throughout the application?

26 of 55.

A data scientist at an e-commerce company is working with user data obtained from its subscriber database and has stored the data in a DataFrame df_user.

Before further processing, the data scientist wants to create another DataFrame df_user_non_pii and store only the non-PII columns.

The PII columns in df_user are name, email, and birthdate.

Which code snippet can be used to meet this requirement?
