A company has an existing Amazon SageMaker AI model (v1) on a production endpoint. The company develops a new model version (v2) and needs to test v2 in production before substituting v2 for v1.

The company needs to minimize the risk of v2 generating incorrect output in production and must prevent any disruption of production traffic during the change.

Which solution will meet these requirements?

A company is using Amazon SageMaker and millions of files to train an ML model. Each file is several megabytes in size. The files are stored in an Amazon S3 bucket. The company needs to improve training performance.

Which solution will meet these requirements in the LEAST amount of time?

A company has trained an ML model in Amazon SageMaker. The company needs to host the model to provide inferences in a production environment.

The model must be highly available and must respond with minimum latency. The size of each request will be between 1 KB and 3 MB. The model will receive unpredictable bursts of requests during the day. The inferences must adapt proportionally to the changes in demand.

How should the company deploy the model into production to meet these requirements?

An ML engineer is analyzing a classification dataset before training a model in Amazon SageMaker AI. The ML engineer suspects that the dataset has a significant imbalance between class labels that could lead to biased model predictions. To confirm class imbalance, the ML engineer needs to select an appropriate pre-training bias metric.

Which metric will meet this requirement?
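
To make the idea of label imbalance concrete, here is a minimal sketch, assuming a binary label column and using the normalized difference in counts, (n_a - n_d) / (n_a + n_d). The function name and the sample data are hypothetical, and this is not a SageMaker API call; it only illustrates the kind of quantity a pre-training imbalance metric could report.

```python
from collections import Counter


def normalized_count_difference(labels, majority, minority):
    """Return (n_a - n_d) / (n_a + n_d) for two label values.

    Values near 0 suggest balanced groups; values near +1 or -1
    suggest that one group dominates the dataset.
    """
    counts = Counter(labels)
    n_a, n_d = counts[majority], counts[minority]
    if n_a + n_d == 0:
        raise ValueError("neither label value occurs in the data")
    return (n_a - n_d) / (n_a + n_d)


# Hypothetical data: 90 negative examples and 10 positive examples.
sample_labels = [0] * 90 + [1] * 10
imbalance = normalized_count_difference(sample_labels, majority=0, minority=1)
print(f"imbalance = {imbalance:.2f}")
```

A result close to zero would suggest the two classes are roughly balanced, while a result close to either extreme would support the suspicion of imbalance.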

A company needs to deploy a custom-trained classification ML model on AWS. The model must make near real-time predictions with low latency and must handle variable request volumes.

Which solution will meet these requirements?

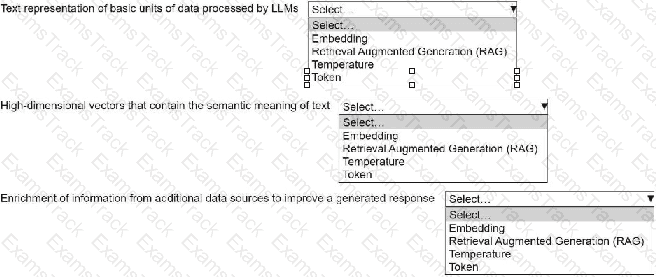

An ML engineer is building a generative AI application on Amazon Bedrock by using large language models (LLMs).

Select the correct generative AI term from the following list for each description. Each term should be selected one time or not at all. (Select three.)

• Embedding

• Retrieval Augmented Generation (RAG)

• Temperature

• Token

A company is developing an ML model to predict customer satisfaction. The company needs to use survey feedback and the past satisfaction level of customers to predict the future satisfaction level of customers.

The dataset includes a column named Feedback that contains long text responses. The dataset also includes a column named Satisfaction Level that contains three distinct values for past customer satisfaction: High, Medium, and Low. The company must apply encoding methods to transform the data in each column.

Which solution will meet these requirements?
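
As a rough illustration of encoding the two column types described above, the following sketch maps the ordered Satisfaction Level categories to integers and splits Feedback text into lowercase word tokens. The integer mapping, the regex-based tokenizer, and the sample rows are assumptions for illustration only; this is one possible approach, not a prescribed answer or a SageMaker feature.

```python
import re

# Assumed ordering of the three categories: Low < Medium < High.
SATISFACTION_ORDER = {"Low": 0, "Medium": 1, "High": 2}


def encode_satisfaction(level):
    """Map an ordered category to its integer rank."""
    return SATISFACTION_ORDER[level]


def tokenize_feedback(text):
    """Split free text into lowercase word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


# Hypothetical survey rows.
rows = [
    {"Feedback": "Great support, very fast!", "Satisfaction Level": "High"},
    {"Feedback": "Delivery was late.", "Satisfaction Level": "Low"},
]
encoded = [
    (tokenize_feedback(r["Feedback"]), encode_satisfaction(r["Satisfaction Level"]))
    for r in rows
]
print(encoded)
```

The integer ranks preserve the ordering of the categories, which a one-hot representation would discard; whether that ordering matters depends on the model being trained.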

An ML engineer uses one ML framework to train multiple ML models. The ML engineer needs to optimize inference costs and host the models on Amazon SageMaker AI.

Which solution will meet these requirements MOST cost-effectively?

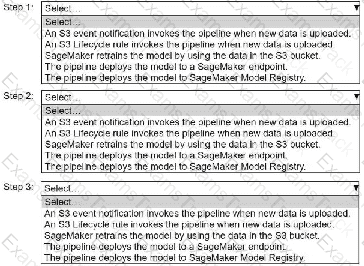

A company wants to host an ML model on Amazon SageMaker. An ML engineer is configuring a continuous integration and continuous delivery (CI/CD) pipeline in AWS CodePipeline to deploy the model. The pipeline must run automatically when new training data for the model is uploaded to an Amazon S3 bucket.

Select and order the pipeline's correct steps from the following list. Each step should be selected one time or not at all. (Select and order three.)

• An S3 event notification invokes the pipeline when new data is uploaded.

• An S3 Lifecycle rule invokes the pipeline when new data is uploaded.

• SageMaker retrains the model by using the data in the S3 bucket.

• The pipeline deploys the model to a SageMaker endpoint.

• The pipeline deploys the model to SageMaker Model Registry.

An ML engineer wants to deploy a workflow that processes streaming IoT sensor data and periodically retrains ML models. The most recent model versions must be deployed to production.

Which service will meet these requirements?
