You want to connect to a secured Kafka cluster that uses SSL encryption, authenticating with a username and password.

Which properties must your client include?

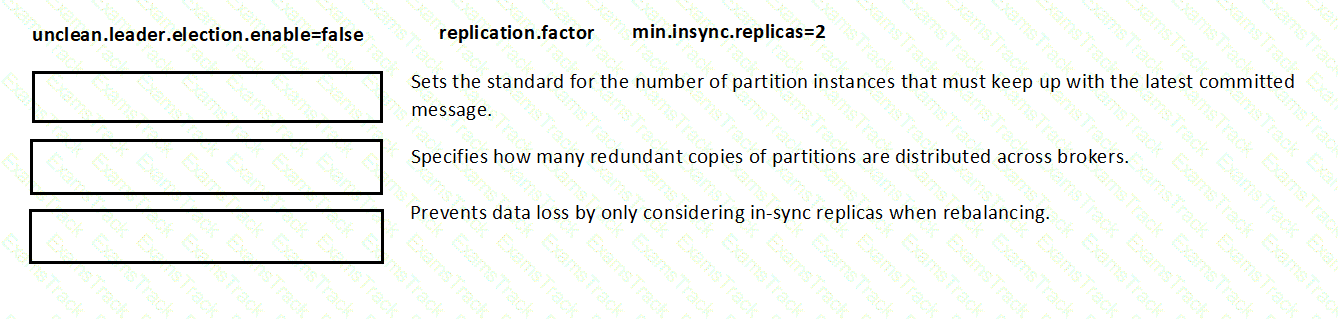
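A minimal sketch, assuming the Java client, SASL/PLAIN as the username/password mechanism, and a JKS truststore. The central settings are `security.protocol=SASL_SSL`, `sasl.mechanism`, and `sasl.jaas.config`. The truststore entries are only needed when the broker certificate is not signed by a CA that the JVM already trusts. The broker address, credentials, and file paths are placeholders, and the class name is hypothetical. Because the sketch uses only `java.util.Properties`, it runs without the Kafka library. The resulting object would be passed to a `KafkaProducer` or `KafkaConsumer` constructor.

```java
import java.util.Properties;
import java.util.TreeSet;

// Hypothetical helper: client properties for SASL/PLAIN over an SSL-encrypted connection.
final class SaslSslClientConfig {

    static Properties build(String bootstrap, String username, String password,
                            String truststorePath, String truststorePassword) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrap);
        // SASL authentication on top of an SSL/TLS-encrypted channel
        props.put("security.protocol", "SASL_SSL");
        // PLAIN carries a username and password (SCRAM-SHA-256/512 is an alternative)
        props.put("sasl.mechanism", "PLAIN");
        props.put("sasl.jaas.config",
            "org.apache.kafka.common.security.plain.PlainLoginModule required "
            + "username=\"" + username + "\" password=\"" + password + "\";");
        // Only needed if the broker certificate is not trusted by the JVM's default truststore
        props.put("ssl.truststore.location", truststorePath);
        props.put("ssl.truststore.password", truststorePassword);
        return props;
    }

    public static void main(String[] args) {
        // Placeholder values, not a real cluster
        Properties props = build("broker1.example.com:9093", "alice", "alice-secret",
                "/etc/kafka/client.truststore.jks", "changeit");
        // Print only the keys so the example does not echo secrets
        new TreeSet<>(props.stringPropertyNames()).forEach(System.out::println);
    }
}
```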

Match each topic configuration setting with the reason it affects topic durability.

The settings include unclean.leader.election.enable=false, replication.factor, and min.insync.replicas=2.
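The following rough sketch shows how these settings interact. The class and method names are hypothetical. `unclean.leader.election.enable=false` prevents an out-of-sync replica from becoming leader. `replication.factor` sets how many copies of each partition exist, and it is specified when the topic is created rather than as a config override. `min.insync.replicas` only takes effect for producers that use `acks=all`. The helper computes how many replica failures a partition can absorb while still accepting `acks=all` writes.

```java
import java.util.Properties;

// Hypothetical illustration of durability-related topic settings.
final class DurabilitySettings {

    // Topic-level overrides (replication.factor is passed separately at topic creation).
    static Properties topicConfig() {
        Properties topic = new Properties();
        // Never elect an out-of-sync replica as leader, so acknowledged writes are not silently lost
        topic.put("unclean.leader.election.enable", "false");
        // With acks=all, a write succeeds only if at least this many replicas are in sync
        topic.put("min.insync.replicas", "2");
        return topic;
    }

    // Replica failures a partition can absorb while still accepting acks=all writes.
    static int toleratedFailuresForWrites(int replicationFactor, int minInsyncReplicas) {
        return Math.max(0, replicationFactor - minInsyncReplicas);
    }

    public static void main(String[] args) {
        System.out.println(topicConfig());
        // replication.factor=3 keeps three copies of every partition
        System.out.println(toleratedFailuresForWrites(3, 2)); // prints 1
    }
}
```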

You have a topic with four partitions. The application reading this topic uses a consumer group with two consumers.

Throughput is evenly distributed among partitions, but application lag is increasing.

Application monitoring shows that message processing is consuming all available CPU resources.

Which action should you take to resolve this issue?
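Parallelism inside a consumer group is capped by the partition count, because each partition is consumed by at most one member of the group. The sketch below uses a hypothetical, simplified range-style assignment for a single topic. It is not Kafka's assignor code. It shows why CPU-bound processing can be spread across up to four consumers here, and why a fifth consumer would sit idle.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified range-style assignment of one topic's partitions.
final class PartitionSpread {

    static List<List<Integer>> assign(int partitions, int consumers) {
        List<List<Integer>> result = new ArrayList<>();
        int perConsumer = partitions / consumers;
        int extra = partitions % consumers; // the first `extra` consumers get one more partition
        int next = 0;
        for (int c = 0; c < consumers; c++) {
            int count = perConsumer + (c < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                owned.add(next++);
            }
            result.add(owned);
        }
        return result;
    }

    public static void main(String[] args) {
        for (int consumers : new int[] {2, 4, 5}) {
            System.out.println(consumers + " consumers -> " + assign(4, consumers));
        }
    }
}
```

Running `main` prints `2 consumers -> [[0, 1], [2, 3]]`, `4 consumers -> [[0], [1], [2], [3]]`, and `5 consumers -> [[0], [1], [2], [3], []]`. Adding consumers only helps if they run with spare CPU capacity.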

What are three built-in abstractions in the Kafka Streams DSL?

(Select three.)
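The three abstractions are usually listed as `KStream`, `KTable`, and `GlobalKTable`. The following stdlib-only sketch uses a hypothetical class, not the Streams API. It shows that the difference between a stream and a table lies in how records are interpreted. A stream keeps every record as an independent event. A table treats each record as an upsert for its key, and a `null` value acts as a delete. A `GlobalKTable` is a table whose full contents are replicated to every application instance instead of being partitioned. The sketch does not model that distribution aspect.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration of stream vs. table semantics; not the Kafka Streams API.
final class StreamVsTable {

    // KStream-style view: every record is an independent event, so all records are kept.
    static List<Map.Entry<String, Integer>> asStream(List<Map.Entry<String, Integer>> records) {
        return new ArrayList<>(records);
    }

    // KTable-style view: each record upserts its key; a null value deletes the key.
    static Map<String, Integer> asTable(List<Map.Entry<String, Integer>> records) {
        Map<String, Integer> table = new LinkedHashMap<>();
        for (Map.Entry<String, Integer> r : records) {
            if (r.getValue() == null) {
                table.remove(r.getKey());
            } else {
                table.put(r.getKey(), r.getValue());
            }
        }
        return table;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Integer>> cartSizes = List.of(
            Map.entry("alice", 1),
            Map.entry("bob", 5),
            Map.entry("alice", 3));
        System.out.println(asStream(cartSizes).size()); // 3
        System.out.println(asTable(cartSizes));         // {alice=3, bob=5}
    }
}
```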

Your application consumes from a topic, and its consumer is configured with a deserializer.

It needs to be resilient to badly formatted records ("poison pills"). You surround the poll() call with a try/catch for RecordDeserializationException.

You need to log the bad record, skip it, and continue processing.

Which action should you take in the catch block?
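In kafka-clients 2.8 and later, `RecordDeserializationException` exposes `topicPartition()` and `offset()`. A common pattern in the catch block is to log these values and call `consumer.seek(e.topicPartition(), e.offset() + 1)`, so the next `poll()` resumes after the bad record. Without the seek, the position stays on the bad record and every `poll()` fails again. A real consumer needs a running cluster, so the sketch below simulates a single partition instead. The `ToyConsumer` and `BadRecordException` classes are hypothetical stand-ins, not Kafka's API.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical single-partition simulation of skipping a poison pill; not the Kafka client API.
final class PoisonPillSkip {

    static final class BadRecordException extends RuntimeException {
        final long offset;

        BadRecordException(long offset, Throwable cause) {
            super("Cannot deserialize record at offset " + offset, cause);
            this.offset = offset;
        }
    }

    static final class ToyConsumer {
        private final List<String> log; // raw record values; list index = offset
        private long position = 0;      // next offset to read

        ToyConsumer(List<String> log) {
            this.log = log;
        }

        // Returns the next deserialized value, or null at the end of the log.
        Integer poll() {
            if (position >= log.size()) {
                return null;
            }
            String raw = log.get((int) position);
            try {
                int value = Integer.parseInt(raw);
                position++;
                return value;
            } catch (NumberFormatException e) {
                // The position is not advanced, so retrying without a seek fails forever.
                throw new BadRecordException(position, e);
            }
        }

        void seek(long offset) {
            position = offset;
        }
    }

    static List<Integer> consumeAll(ToyConsumer consumer, List<Long> skipped) {
        List<Integer> values = new ArrayList<>();
        while (true) {
            try {
                Integer v = consumer.poll();
                if (v == null) {
                    return values;
                }
                values.add(v);
            } catch (BadRecordException e) {
                System.err.println("Skipping poison pill: " + e.getMessage()); // log it
                skipped.add(e.offset);
                consumer.seek(e.offset + 1); // move past the bad record
            }
        }
    }

    public static void main(String[] args) {
        List<Long> skipped = new ArrayList<>();
        List<Integer> values = consumeAll(new ToyConsumer(List.of("1", "2", "oops", "4")), skipped);
        System.out.println(values);  // [1, 2, 4]
        System.out.println(skipped); // [2]
    }
}
```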

Which is true about topic compaction?
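Log compaction retains at least the latest record for each key, and surviving records keep their original offsets. A record with a `null` value is a tombstone, and the tombstone itself is removed once `delete.retention.ms` has passed. The sketch below simulates one compaction pass over an in-memory list. It ignores the active segment, `min.cleanable.dirty.ratio`, and real retention timing. The `Rec` class and the `dropTombstones` flag are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical one-pass simulation of log compaction.
final class CompactionSketch {

    static final class Rec {
        final long offset;
        final String key;
        final String value; // null marks a tombstone (delete)

        Rec(long offset, String key, String value) {
            this.offset = offset;
            this.key = key;
            this.value = value;
        }

        @Override
        public String toString() {
            return offset + ":" + key + "=" + value;
        }
    }

    // Keeps only the last record per key, in original order; offsets are never rewritten.
    // dropTombstones=true models tombstones that have outlived delete.retention.ms.
    static List<Rec> compact(List<Rec> log, boolean dropTombstones) {
        Map<String, Long> latestOffset = new HashMap<>();
        for (Rec r : log) {
            latestOffset.put(r.key, r.offset);
        }
        List<Rec> kept = new ArrayList<>();
        for (Rec r : log) {
            boolean isLatest = latestOffset.get(r.key).longValue() == r.offset;
            if (isLatest && !(dropTombstones && r.value == null)) {
                kept.add(r);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<Rec> log = List.of(
            new Rec(0, "k1", "a"),
            new Rec(1, "k2", "b"),
            new Rec(2, "k1", "c"),
            new Rec(3, "k2", null));
        System.out.println(compact(log, false)); // [2:k1=c, 3:k2=null]
        System.out.println(compact(log, true));  // [2:k1=c]
    }
}
```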

Which two statements are correct when assigning partitions to the consumers in a consumer group using the assign() API?

(Select two.)

You are designing a stream pipeline to monitor the real-time location of GPS trackers, where historical location data is not required.

Each event has:

• Key: trackerId

• Value: latitude, longitude

You need to ensure that the latest location for each tracker is always retained in the Kafka topic.

Which topic configuration parameter should you set?
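A minimal sketch, assuming the topic is written with `trackerId` as the record key. With `cleanup.policy=compact`, Kafka retains at least the latest value per key rather than deleting segments by age. Older locations are removed eventually by the log cleaner, not immediately. The class name is hypothetical, and other compaction tuning settings are left at their defaults.

```java
import java.util.Properties;

// Hypothetical helper: topic-level override for a "latest location per trackerId" topic.
final class TrackerTopicConfig {

    static Properties latestLocationTopic() {
        Properties cfg = new Properties();
        // Retain at least the most recent record per key (trackerId) instead of deleting by age
        cfg.put("cleanup.policy", "compact");
        return cfg;
    }

    public static void main(String[] args) {
        System.out.println(latestLocationTopic()); // {cleanup.policy=compact}
    }
}
```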

You have a Kafka client application that has real-time processing requirements.

Which Kafka metric should you monitor?
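For real-time requirements, consumer lag is the usual metric to watch. The Java consumer reports the largest per-partition lag as `records-lag-max` in the `consumer-fetch-manager-metrics` group. A partition's lag is the distance between the end offset the consumer can read up to and the consumer's current position. The sketch below computes this from made-up offsets. The class and method names are hypothetical.

```java
import java.util.Map;

// Hypothetical lag calculation; offsets in main are made up.
final class ConsumerLag {

    // Lag for one partition: records available but not yet consumed.
    static long lag(long endOffset, long consumerPosition) {
        return Math.max(0, endOffset - consumerPosition);
    }

    // Largest lag across partitions, similar in spirit to records-lag-max.
    static long maxLag(Map<Integer, long[]> offsetsByPartition) {
        long max = 0;
        for (long[] o : offsetsByPartition.values()) {
            max = Math.max(max, lag(o[0], o[1]));
        }
        return max;
    }

    public static void main(String[] args) {
        // partition -> {end offset, consumer position}
        Map<Integer, long[]> offsets = Map.of(
            0, new long[] {1_000, 990},
            1, new long[] {1_200, 900},
            2, new long[] {800, 800});
        System.out.println(maxLag(offsets)); // 300
    }
}
```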

A stream processing application tracks user activity in online shopping carts, including items added, removed, and ordered throughout the day for each user.

You need to capture data to identify possible periods of user inactivity.

Which type of Kafka Streams window should you use?
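Session windows group a key's events into sessions that are separated by gaps of inactivity longer than a configured gap. The gaps between sessions therefore mark periods of inactivity. In Kafka Streams 3.0 and later, such a window can be defined with `SessionWindows.ofInactivityGapWithNoGrace(Duration)`. The stdlib-only sketch below splits one user's sorted event timestamps into sessions. It ignores out-of-order records and grace periods, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical session-window simulation for one key's in-order events.
final class SessionWindowSketch {

    // Splits sorted timestamps (ms) into [start, end] sessions; a gap larger than
    // inactivityGapMs since the previous event closes the current session.
    static List<long[]> sessions(List<Long> sortedTimestamps, long inactivityGapMs) {
        List<long[]> result = new ArrayList<>();
        boolean open = false;
        long start = 0;
        long end = 0;
        for (long ts : sortedTimestamps) {
            if (!open) {
                start = ts;
                open = true;
            } else if (ts - end > inactivityGapMs) {
                result.add(new long[] {start, end});
                start = ts;
            }
            end = ts;
        }
        if (open) {
            result.add(new long[] {start, end});
        }
        return result;
    }

    public static void main(String[] args) {
        long min = 60_000L;
        List<Long> cartEvents = List.of(0L, 2 * min, 5 * min, 40 * min, 41 * min);
        for (long[] s : sessions(cartEvents, 10 * min)) {
            System.out.println("session " + s[0] / min + "-" + s[1] / min + " min");
        }
        // session 0-5 min
        // session 40-41 min
    }
}
```

The 35-minute gap between the two sessions is the kind of inactivity period the question asks about.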
